When the whole world is shaken by change and crisis, the time comes to consolidate forces, optimize resources, shift paradigms and adapt to new conditions.

In the world of computer hardware you can see a clear trend: the x86 architecture, invented over 40 years ago, is beginning to lose ground now that energy and cost efficiency set the rules. The mobile device market has long outgrown the desktop market, and this greatly influences server hardware. Energy efficiency is being pushed into data centers, servers and processors, and those tiny hard workers that used to stay in the shadows, serving only routers and washing machines, are now beginning to share space in server racks with their older brothers.

We're talking about the ARM architecture. AWS recently launched new instance types on its own Graviton processors, starting with the general-purpose a1 instances. At sweatcoin, we constantly monitor new technologies and try to apply them to reach more and more ambitious targets. Having tried a1, we realized that they were not enough for our purposes.

But now c6g instances are coming into play. They are marketed as a replacement for c5, the excellent x86-based instances we use. Such a bold step could not escape our attention, and we decided to see how our backend would work on the new architecture.

What's stopping RoR from running on ARM?

Our application is written in Ruby on Rails. It is mainly an API, but there is also a graphical part. Compiling assets requires a JavaScript runtime, which is usually provided by the therubyracer or mini_racer gems. Ruby itself supports the ARM architecture, but most well-known Ruby on Rails projects do not.

The point is that therubyracer and mini_racer both require the libv8 gem, which provides the native libraries of the V8 project. They are needed to work with JavaScript in your Rails app. By default libv8 downloads precompiled libraries for your architecture, but none exist for arm64. In that case libv8 downloads the sources and tries to compile them. This is where things go wrong.
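To make the "no precompiled library for our architecture" case concrete, here is a minimal sketch of platform matching using RubyGems' own `Gem::Platform` class. It is only an illustration, not the actual resolution logic of libv8 or RubyGems: the helper `prebuilt_available?` and the list of published platforms are hypothetical.

```ruby
require "rubygems"

# Hypothetical helper: returns true when at least one of the published
# platform strings matches the target platform.
def prebuilt_available?(published, target)
  target_platform = Gem::Platform.new(target)
  published.any? { |name| Gem::Platform.new(name) === target_platform }
end

# Hypothetical list of platforms that ship a precompiled gem.
published = %w[x86_64-linux x86_64-darwin]

puts "this machine:  #{Gem::Platform.local}"
puts "x86_64-linux:  #{prebuilt_available?(published, 'x86_64-linux')}"
puts "aarch64-linux: #{prebuilt_available?(published, 'aarch64-linux')}"
```

When nothing matches, a source build is the only option left, which is exactly the path we ended up on.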

We've simplified our Dockerfile a bit, but in general building the project is no different from any other Ruby on Rails project:

```dockerfile
FROM ruby:2.5.8

RUN apt-get update && apt-get install -y \
    libproj-dev \
    build-essential zlib1g-dev libncurses5-dev libgdbm-dev libnss3-dev libssl-dev libreadline-dev libffi-dev wget libev-dev \
    && rm -rf /var/lib/apt/lists/*

ENV LANG C.UTF-8
ENV RAILS_ENV production
ENV RAILS_SERVE_STATIC_FILES true
ENV BUNDLE_DISABLE_EXEC_LOAD=true

RUN mkdir /app
WORKDIR /app

ADD Gemfile* ./

ARG BUNDLE_JOBS=1
RUN bundle install --jobs $BUNDLE_JOBS --without development test && \
    rm -rf Gemfile*
```

Everything goes well at first, but the last step fails while installing the libv8 gem:

```text
Traceback (most recent call last):
  File "/home/ubuntu/libv8/vendor/depot_tools/gn.py", line 38, in <module>
    sys.exit(main(sys.argv))
  File "/home/ubuntu/libv8/vendor/depot_tools/gn.py", line 33, in main
    return subprocess.call([gn_path] + args[1:])
  File "/usr/lib/python2.7/subprocess.py", line 522, in call
    return Popen(*popenargs, **kwargs).wait()
  File "/usr/lib/python2.7/subprocess.py", line 710, in __init__
    errread, errwrite)
  File "/usr/lib/python2.7/subprocess.py", line 1335, in _execute_child
    raise child_exception
OSError: [Errno 8] Exec format error
No prebuilt ninja binary was found for this system.
Try building your own binary by doing:
  cd ~
  git clone https://github.com/ninja-build/ninja.git -b v1.8.2
  cd ninja && ./configure.py --bootstrap
Then add ~/ninja/ to your PATH.
```

At first it is hard to tell what is going on, but let's try to solve this riddle. Two tools are involved:

gn - Google's build configuration utility

ninja - project build and compile utility

Let's clone the libv8 code into a separate directory and try to walk through the installation steps manually:

```shell
git clone --recursive https://github.com/rubyjs/libv8.git
cd libv8
bundle install
bundle exec rake compile
```

Okay, so we've reproduced our error. If we look at the build script, we can see the steps that produce it:

```ruby
def generate_gn_args
  system "gn gen out.gn/libv8 --args='#{gn_args}'"
end

# ...

def build_libv8!
  setup_python!
  setup_build_deps!
  Dir.chdir(File.expand_path('../../../vendor/v8', __FILE__)) do
    puts 'Beginning compilation. This will take some time.'
    generate_gn_args

    system 'ninja -v -C out.gn/libv8 v8_monolith'
  end
  return $?.exitstatus
end
```

We can clearly see two system calls, system "gn gen out.gn/libv8 --args='#{gn_args}'" and system 'ninja -v -C out.gn/libv8 v8_monolith', which try to run executables from vendor/depot_tools and vendor/v8/buildtools/linux64/. But those executables are compiled for x86, which explains the Exec format error on ARM.
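One way to confirm which architecture a vendored binary was built for is to read its ELF header directly. The sketch below is not part of libv8: `elf_machine` and `binary_arch` are hypothetical helpers, and only two `e_machine` values from the ELF specification are mapped (0x3E for x86-64 and 0xB7 for AArch64).

```ruby
# e_machine values from the ELF specification (only the two we care about).
ELF_MACHINES = {
  0x3E => "x86-64",
  0xB7 => "aarch64"
}.freeze

# Hypothetical helper: returns the architecture named in an ELF header,
# or nil when the data does not start with the ELF magic bytes.
def elf_machine(header)
  return nil unless header && header.bytesize >= 20
  return nil unless header.byteslice(0, 4) == "\x7FELF".b

  # EI_DATA (offset 5): 1 = little-endian, 2 = big-endian.
  directive = header.getbyte(5) == 2 ? "n" : "v"
  # e_machine is a 16-bit field at offset 18.
  machine = header.byteslice(18, 2).unpack1(directive)
  ELF_MACHINES.fetch(machine) { format("unknown (0x%X)", machine) }
end

# Hypothetical helper: reads just the first 20 bytes of the file.
def binary_arch(path)
  elf_machine(File.binread(path, 20))
end

if __FILE__ == $PROGRAM_NAME
  ARGV.each do |path|
    puts "#{path}: #{binary_arch(path) || 'not an ELF binary'}"
  end
end
```

Saved under a name of your choice and run against a binary such as vendor/depot_tools/ninja, it should report the architecture that binary was built for, assuming the file is an ELF executable.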

Let's try to fix it!

Fast & Furious 42 – Problem Solving

We decided to simply replace the x86 binaries with ones built for ARM. Thanks to the Ubuntu repository, we already have ninja. Let's just copy it to depot_tools:

```shell
apt-get update && apt-get install ninja-build
cp /usr/bin/ninja vendor/depot_tools/ninja
```

We also need the gn tool. Let's compile it and move it to v8/buildtools:

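```shell
git clone https://gn.googlesource.com/gn
cd gn
# drop a linker flag before generating the build files
sed -i -e "s/-Wl,--icf=all//" build/gen.py
python build/gen.py && ninja -C out
# run from the libv8 checkout; /gn is where gn was cloned and built
cp /gn/out/gn vendor/v8/buildtools/linux64/
```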

Also we need clang for ARM:

```shell
wget -q https://github.com/llvm/llvm-project/releases/download/llvmorg-10.0.0/clang+llvm-10.0.0-aarch64-linux-gnu.tar.xz
tar xf clang+llvm-10.0.0-aarch64-linux-gnu.tar.xz
rm -rf vendor/v8/third_party/llvm-build/Release+Asserts/bin
rm -rf vendor/v8/third_party/llvm-build/Release+Asserts/lib
mv clang+llvm-10.0.0-aarch64-linux-gnu/* vendor/v8/third_party/llvm-build/Release+Asserts
rm -rf clang+llvm-10.0.0-aarch64-linux-gnu.tar.xz clang+llvm-10.0.0-aarch64-linux-gnu
```

After all these manipulations we're finally ready to build and install the V8 libraries in our gem:

```shell
bundle exec rake compile
bundle exec rake binary
gem install /gn/libv8/pkg/libv8-8.4.255.0-aarch64-linux.gem
```

We've also put together a complete Dockerfile with all the commands above:

```dockerfile
FROM ruby:2.5.8

RUN apt-get update && apt-get install -y \
    libgeos-dev \
    libproj-dev \
    libjemalloc-dev \
    build-essential zlib1g-dev libncurses5-dev libgdbm-dev libnss3-dev libssl-dev libreadline-dev libffi-dev wget libev-dev ninja-build clang libncurses-dev libtinfo5 \
    && rm -rf /var/lib/apt/lists/*

RUN gem update --system && gem install bundler -v "2.1.4"

ENV LANG C.UTF-8
ENV RAILS_ENV production
ENV RAILS_SERVE_STATIC_FILES true
ENV LD_PRELOAD /usr/lib/aarch64-linux-gnu/libjemalloc.so
ENV BUNDLE_DISABLE_EXEC_LOAD true
ENV SHELL /bin/bash

# Build gn for ARM
RUN git clone https://gn.googlesource.com/gn
WORKDIR /gn
RUN sed -i -e "s/-Wl,--icf=all//" build/gen.py && python build/gen.py && ninja -C out

# Clone libv8; "exit 0" ignores the failure of the first compile attempt
RUN git clone --recursive https://github.com/rubyjs/libv8.git
WORKDIR /gn/libv8
RUN bundle install
RUN bundle exec rake compile; exit 0

# Replace the bundled clang with the aarch64 build
RUN wget -q https://github.com/llvm/llvm-project/releases/download/llvmorg-10.0.0/clang+llvm-10.0.0-aarch64-linux-gnu.tar.xz && \
    tar xf clang+llvm-10.0.0-aarch64-linux-gnu.tar.xz && \
    rm -rf vendor/v8/third_party/llvm-build/Release+Asserts/bin && \
    rm -rf vendor/v8/third_party/llvm-build/Release+Asserts/lib
RUN mv clang+llvm-10.0.0-aarch64-linux-gnu/* vendor/v8/third_party/llvm-build/Release+Asserts && \
    rm -rf clang+llvm-10.0.0-aarch64-linux-gnu.tar.xz clang+llvm-10.0.0-aarch64-linux-gnu

# Replace the x86 gn and ninja binaries with the ARM ones
RUN cp /gn/out/gn vendor/v8/buildtools/linux64/ && \
    cp /usr/bin/ninja vendor/depot_tools/ninja

# Build and install the libv8 gem
RUN bundle exec rake compile
RUN bundle exec rake binary
RUN gem install /gn/libv8/pkg/libv8-8.4.255.0-aarch64-linux.gem

WORKDIR /app

ADD Gemfile* ./

ARG BUNDLE_JOBS=1
RUN bundle install --jobs $BUNDLE_JOBS --without development test && \
    rm -rf Gemfile*

RUN rm -rf /gn
```

Performance & conclusion

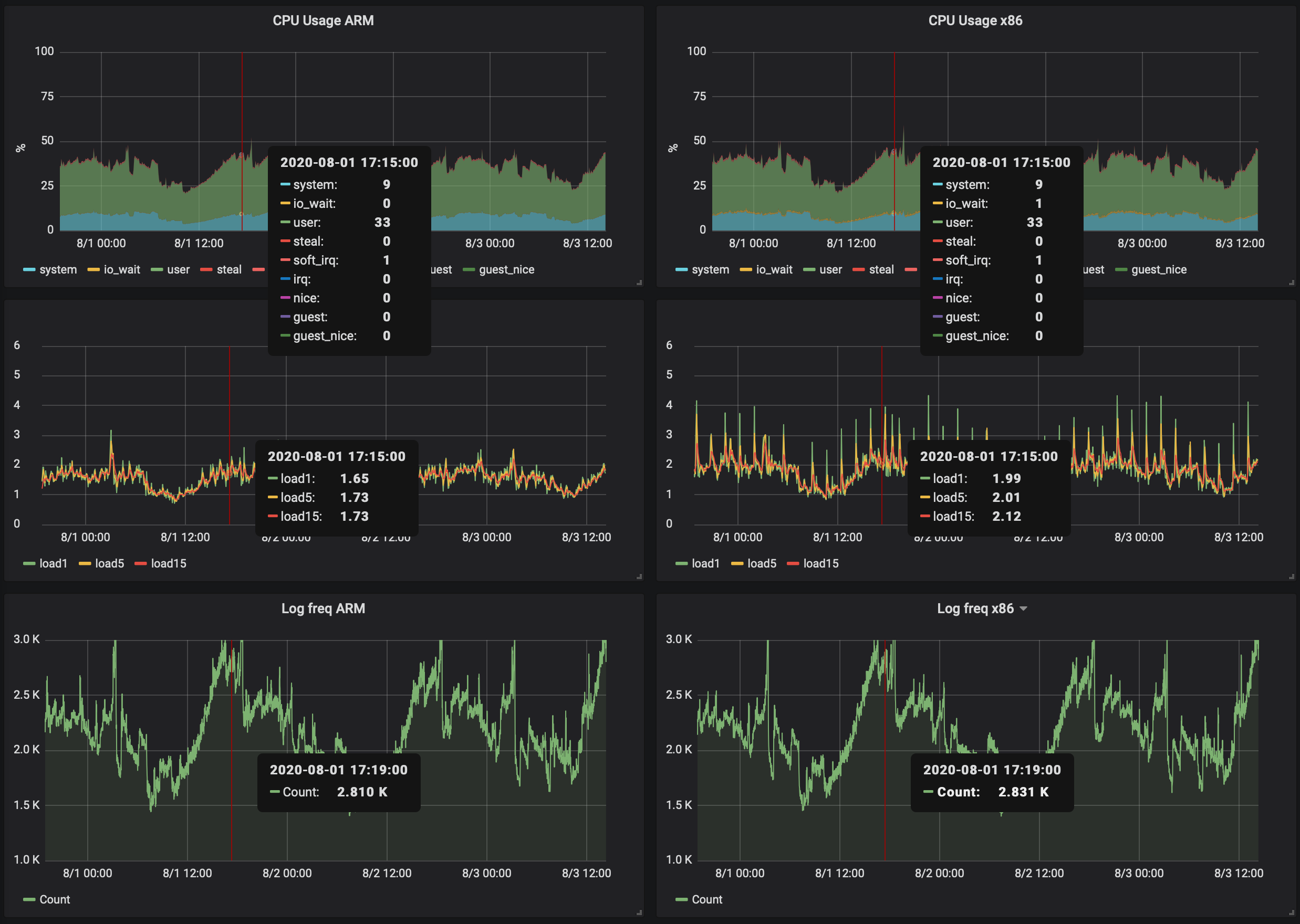

Finally it's time to test the performance of the code on the new architecture. We connected our application to a load balancer and measured the number of requests per minute, load average, and processor load. For comparison, we created the same conditions for the x86 architecture on the c5 instance:

You can see from the charts that the c6g machine is in no way inferior to the c5, and sometimes even slightly outperforms it. We can say that ARM instances are ready for production, and you can carefully start introducing them.

We at sweatcoin are also updating our fleet, but there are still many unanswered questions. Parts of our code are written in Crystal and optimized for x86, and we still have to wrestle with that. But let's leave it for another article!